Search the web from Google Sheets

Search the web using GPT_WEB

Use the GPT_WEB function to search the web in real time and get up-to-date information. It should be used whenever you ask questions about events, facts, people, companies for which freshness and accuracy is important. It is built on Perplexity AI's online model.

Safe mode is available with GPT_WEB, allowing you to avoid most timeouts and saving responses in the formula's last parameter. This prevents data loss and avoids extra costs during automatic Sheets refreshes.

Perform general searches

Prerequisites

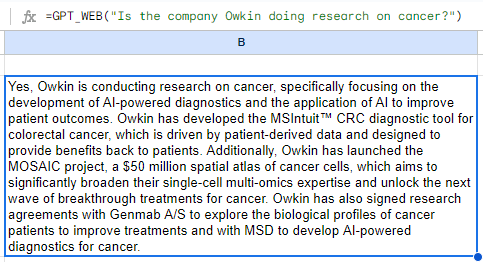

The GPT_WEB function enables you to ask direct questions and get up-to-date answers based on a web search:

=GPT_WEB("Is the company Owkin doing research on cancer?")

Perform targeted searches

Prerequisites

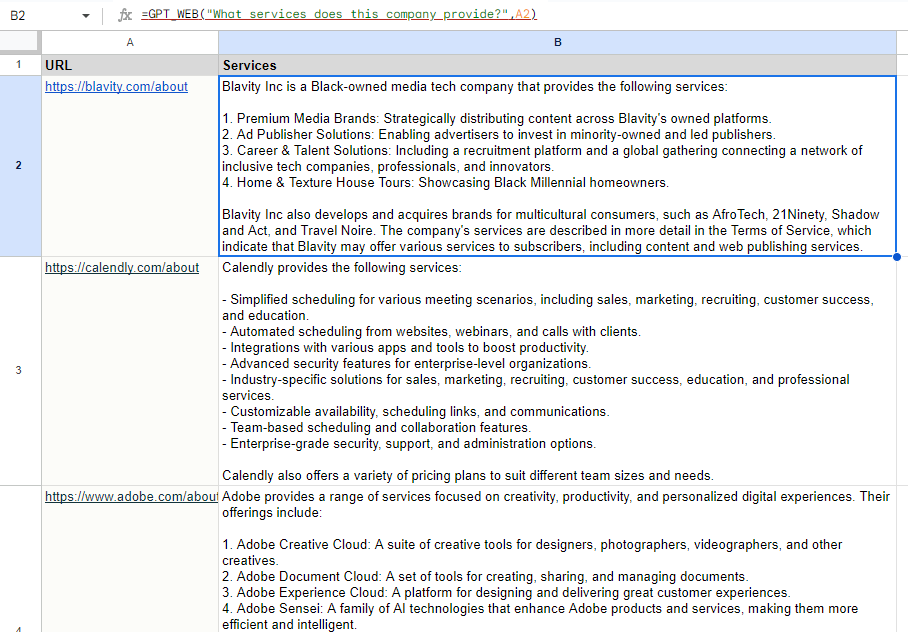

Provide a specific URL from which the information must be retrieved:

=GPT_WEB("What services does this company provide?", A2)

The URL must start with http://, or https://.

Scrape web pages

Import web content directly from a Google spreadsheet by installing a script and providing the URLs to scrape.

Prerequisite: Install the script in your spreadsheet

Install the scraping script in your spreadsheet to enable the import of web page content.

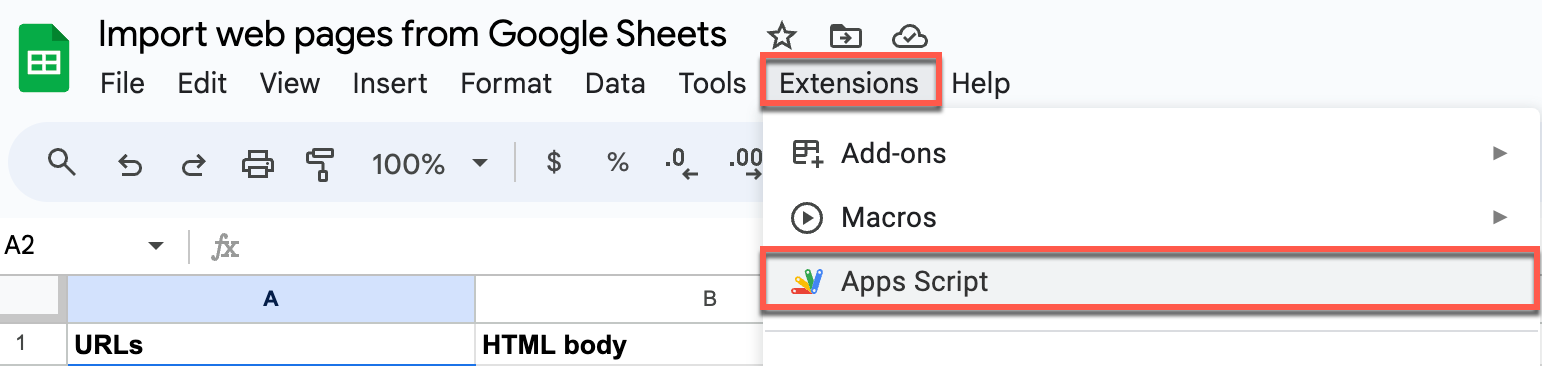

From the Extensions menu in your spreadsheet, click Apps Script.

A new project opens on the Apps Script page.

Copy and paste the script into the script editor.

/**

* @OnlyCurrentDoc

*/

const SHEETS_CELL_LIMIT = 50000;

function onOpen() {

SpreadsheetApp

.getUi()

.createMenu("Scrape")

.addItem("Scrape URLs in column A", "ScrapeAB")

.addToUi()

}

/**

* @param {"https://en.wikipedia.org/wiki/ChatGPT"} url The url to scrape

* @customfunction

*/

function GPT_SCRAPE(url) {

const res = UrlFetchApp.fetch(url);

if (res.getResponseCode() >= 300) {

return `Error: response code ${res.getResponseCode()}`;

}

const html = res.getContentText();

const text = cleanup_html(html);

// html.substring(0,SHEETS_CELL_LIMIT),

return text.substring(0,SHEETS_CELL_LIMIT);

}

function cleanup_html(html) {

// remove tags

const tag_free = html

.replace(/<style([\s\S]*?)<\/style>/gi, "")

.replace(/<script([\s\S]*?)<\/script>/gi, "")

.replace(/<[^>]+>/g, "");

// replace html entities

let decoded = tag_free;

try {

const xml = XmlService.parse('<d>' + tag_free + '</d>');

decoded = xml.getRootElement().getText();

} catch (e) {

// unable to decode some HTML entity

}

// remove repeated spaces

const clean = decoded

.replace(/\n\s*\n/gi, "\n")

.replace(/ /gi, " ")

.trim();

return clean;

}

function ScrapeAB() {

const range = SpreadsheetApp.getActiveSpreadsheet().getRange("A:B");

const values = range.getValues().slice(1)

let urls = 0

let done = 0

for (const row of values) {

const url = row[0];

const res = row[1];

if (!url) continue;

urls++;

if (!res) continue;

done++;

}

if (urls === done) {

SpreadsheetApp.getUi().alert(`Found ${urls} urls, ${done} already scraped. Nothing to be done.`)

return;

}

SpreadsheetApp.getUi().alert(`Found ${urls} urls, ${done} already scraped. Scraping ${urls - done}...`)

for (let irow = 0; irow < values.length; irow++) {

row = values[irow];

const url = row[0];

const res = row[1];

if (!url || res) continue;

const text = GPT_SCRAPE(url)

range.getCell(irow+2, 2).setValue(text);

}

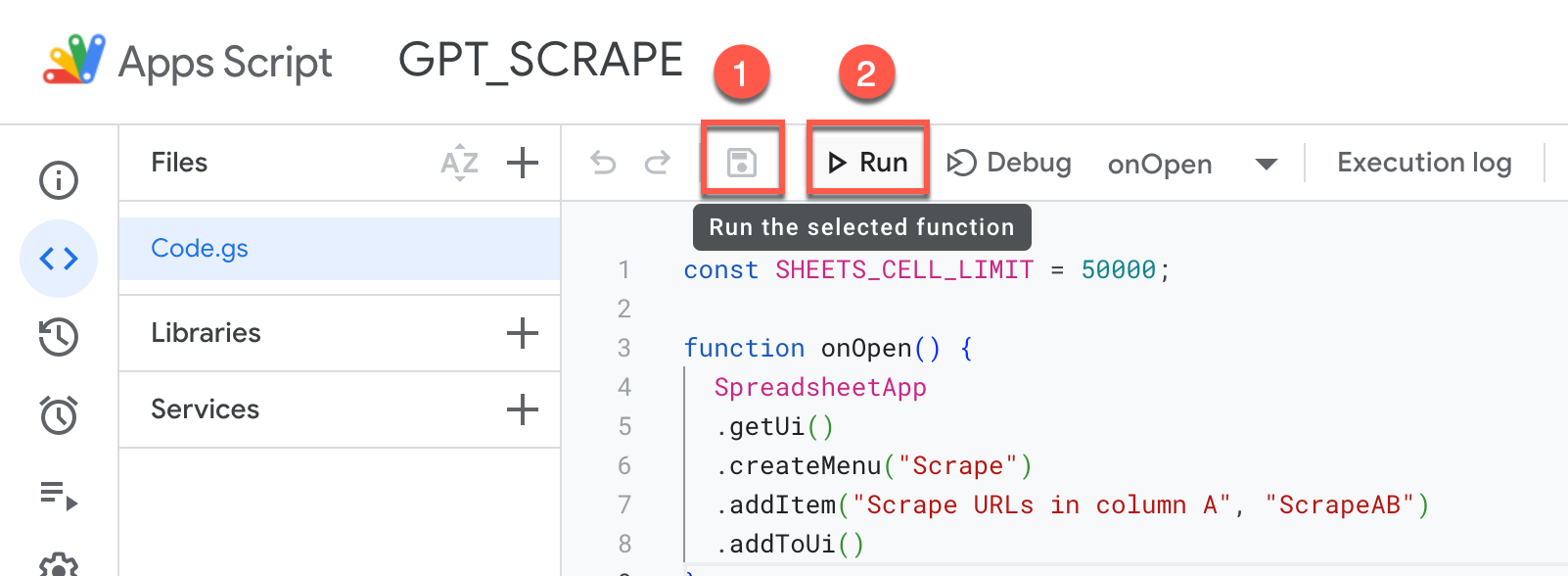

}Save the project and click Run.

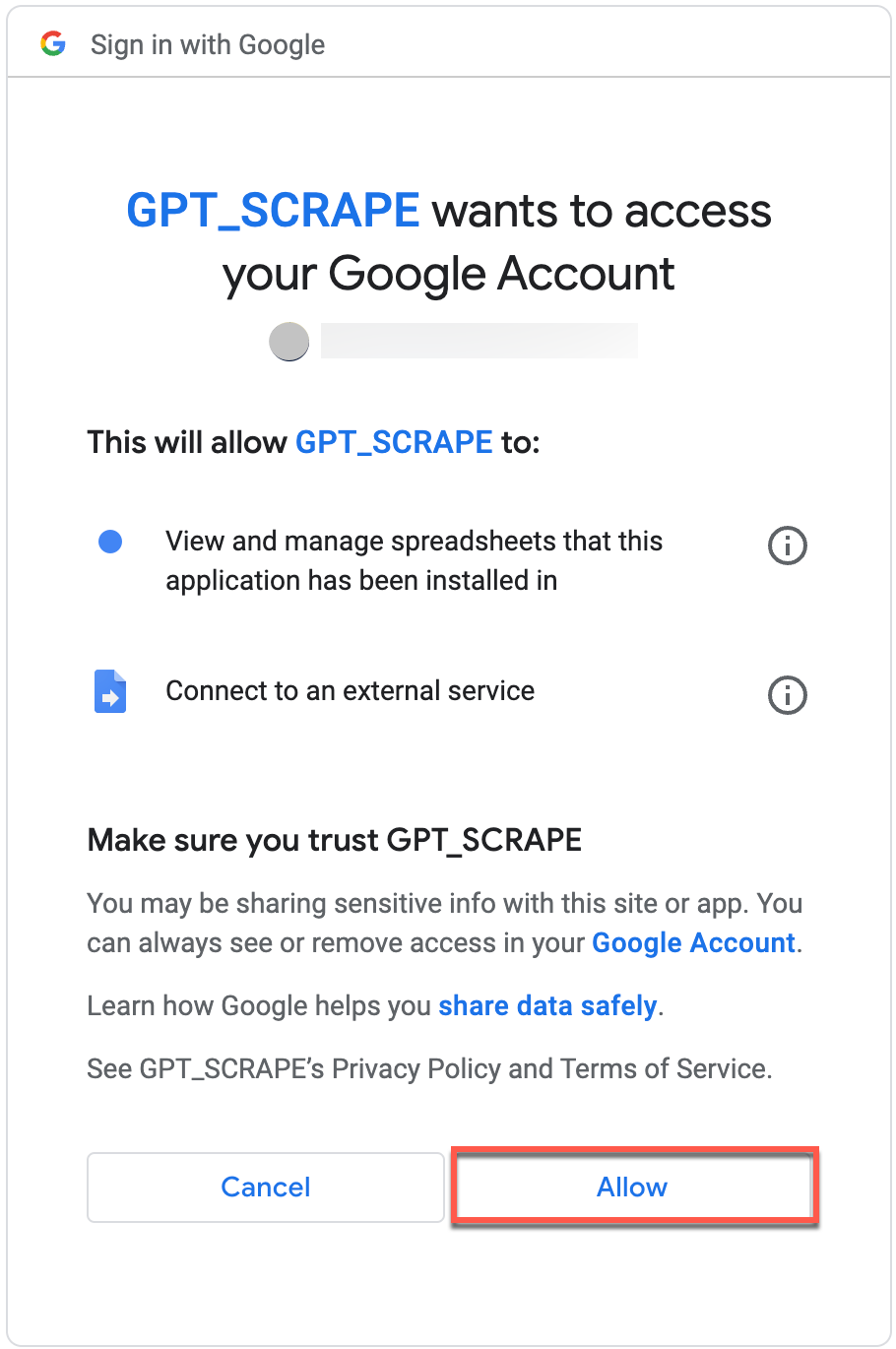

An Authorization required pop-up appears.

Click Review permissions to accept the necessary access rights for the script.

You are prompted to select a Google account.

Choose the account you are using for the spreadsheet. This ensures the script works correctly within your document.

Click Allow to grant these permissions.

The following permissions are necessary for the script to run:

- View and manage spreadsheets that this application has been installed in: Required for the script to specifically interact with the spreadsheets where it's installed, whether it's adding, modifying, or removing data.

- Connect to an external service: Required for the script to browse web pages and import the content to your Google Sheets.

You can now scrape multiple URLs at once in your spreadsheet or scrape a single URL using GPT_SCRAPE.

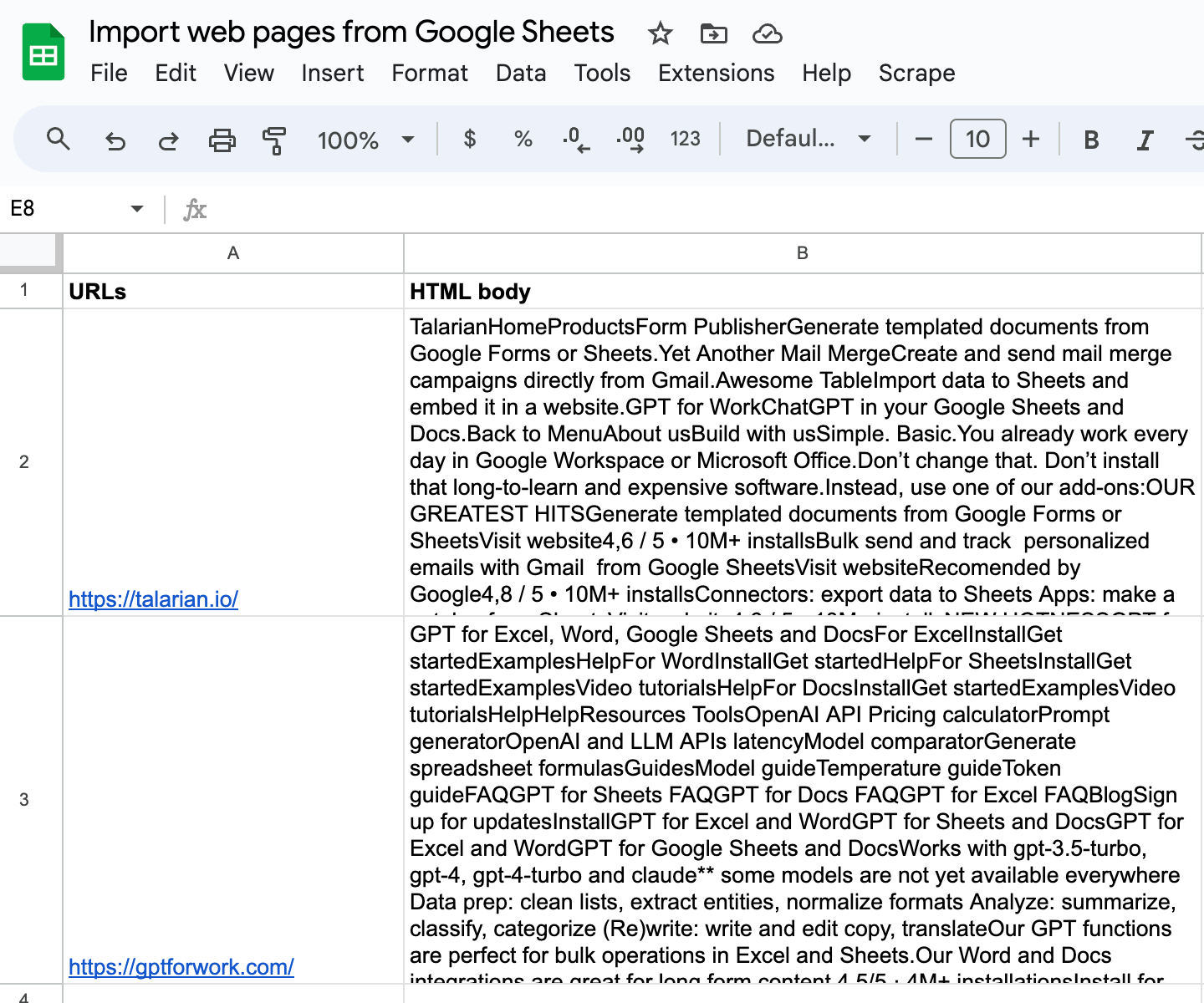

Scrape multiple URLs at once in your spreadsheet

After installing the script, your spreadsheet will display a new Scrape menu.

Prerequisites

Select Scrape > Scrape URLs in column A.

A confirmation pop-up appears.

Click OK to launch the script.

This script processes the web page URLs in column A, and write the scraped content into column B, ready for you to edit directly. It removes complex codes and styles, leaving only text, and includes the page's title and main body text.

Scrape a single URL using GPT_SCRAPE

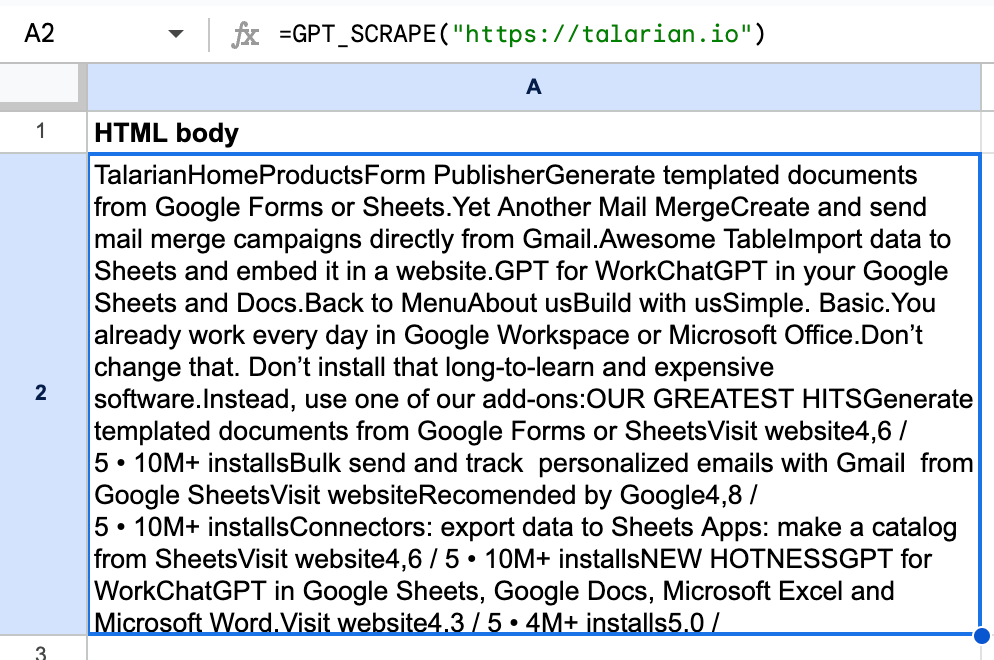

Once the script is installed in the spreadsheet, you can use the GPT_SCRAPE function.

Using the GPT_SCRAPE function does not affect your balance in GPT for Sheets.

Type a GPT_SCRAPE formula into a cell, for example =GPT_SCRAPE("https://talarian.io"), and press Enter to generate the result:

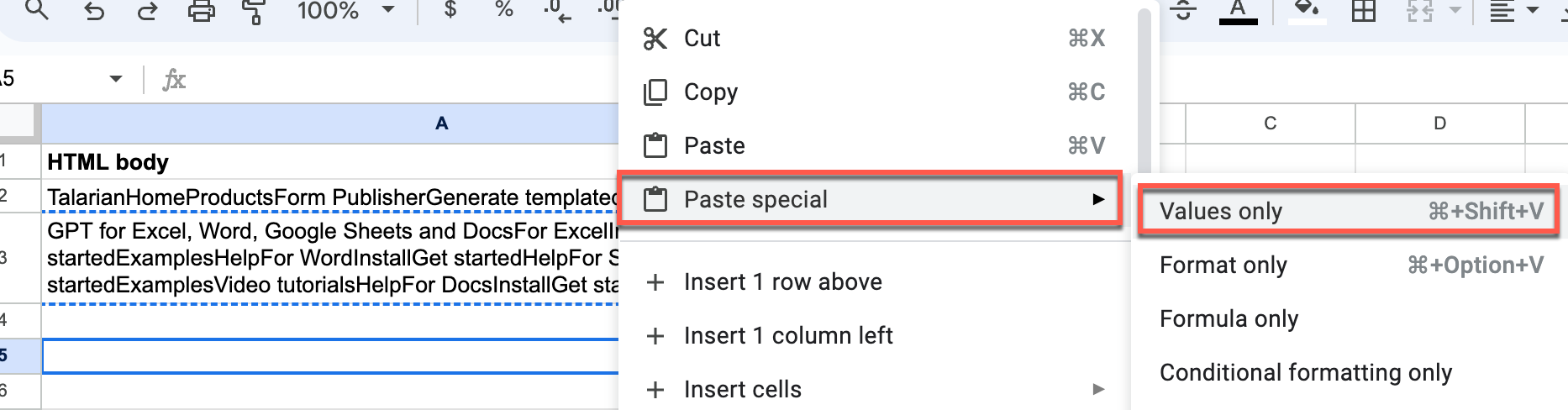

This function browses content from the specified web page and imports it into the cell. To edit a cell's content, copy the cell and use Paste special, and Values only for pasting.

Limitations

Note the following limitations of the web scraping script:

- Caution is advised when scraping content from web pages to adhere to website policies and prevent server overload. Spacing out requests can prevent issues such as IP blocking or service restrictions.

- Each import is capped at 50,000 characters of text. Content beyond this limit will be truncated.

- Web pages heavily reliant on JavaScript might not be fully captured by this script.

- This script is optimized for HTML content. It may not perform optimally with APIs that return structured data, such as JSON.

Analyze, rewrite, translate or extract data from the content you have just imported, using other GPT functions.